In recent years, artificial intelligence has entered the world of software development in a quiet but pervasive way. From GitHub Copilot to ChatGPT and Claude, automatic code generation tools promise to speed up every phase of a programmer's work: writing, debugging, documentation, even architecture.

Yet behind this promise of efficiency lies something more complex: what we gain in speed, we often lose in understanding.

The myth of infinite productivity

Every technological era has had its illusion of efficiency. In the '90s it was "one-click deploy" frameworks, then "low-code" platforms; now it's AI assistants that complete our code before we even understand the context.

The implicit message is always the same: work more, in less time. But writing code isn't just a matter of quantity: it's an act of design, of vision, of balance between logic and creativity.

An algorithm can suggest a syntactically perfect function, but it can never know why that function should exist or whether it's the right solution for the problem we're addressing.

The delegation trap

Many developers report feeling "more productive" thanks to AI. But in reality, what often happens is a delegation of technical thinking.

The AI writes, we check. But the more the machine does, the less the human reflects. And when we stop reflecting, we stop learning.

The risk isn't that AI will take our place: it's that it will make us mentally lazier, more dependent on immediate answers, and less capable of building solutions that are both original and performant.

Quality that cannot be measured

A software project isn't evaluated only by the number of lines of code produced or the speed of release. It's measured in coherence, readability, and the code's ability to evolve.

And here AI shows its limits: it doesn't know the team's culture, doesn't feel the responsibility of refactoring, doesn't perceive the weight of architectural choices. It generates, but doesn't design.

Ethics, copyright, and responsibility

Then there's a deeper issue: who is responsible for code generated by AI? If a bug causes damage, if a library is used without a license, if a vulnerability arises from an automatic suggestion, is the fault with the model, the developer, or the company that adopted it?

The European regulation on artificial intelligence (the EU AI Act), which has just entered into force, attempts to answer these questions by introducing the principle of accountability. But technology moves much faster than the law.

A new awareness

Perhaps the real challenge isn't understanding how much AI can help us, but when we should leave it out.

The future of development won't be made of generated code, but of better-thought-out code. AI can free us from noise, but only if we have the discipline not to surrender control completely.

Because true efficiency doesn't come from speed, but from understanding.

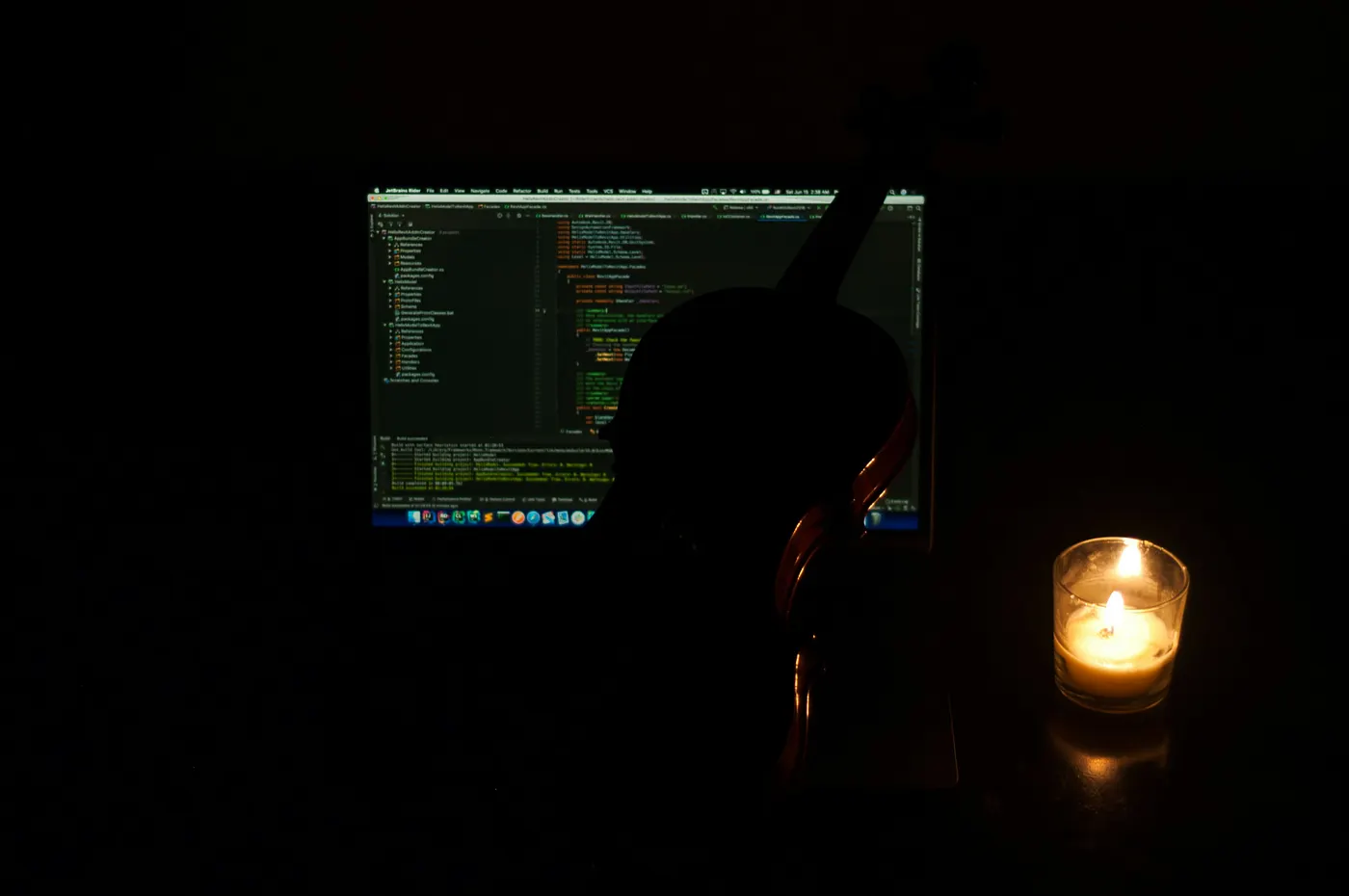

I use artificial intelligence to produce code myself. But when I take the time to instruct my agent (or agents) properly, the time I save is often less than what I would save by letting the AI operate autonomously. In return, I am certain that I always know what my code does, and I know every third-party library and every portion of the code in detail.

How do you approach this? Let me know in the comments if you'd like!